Big Tech: A.I. Can Be Dangerous

(Well, duh!)

Last month, the country’s most prominent technology execs met in a highly unusual closed-door meeting in the U.S. Senate to discuss the advantages and dangers of A.I. and whether and how it should be regulated.

Immediately following the meeting, Senate Majority Leader Chuck Schumer noted that when he polled the entire group, including nearly two dozen tech execs, they unanimously agreed the government should have oversight of Artificial Intelligence. There were no dissenters despite their many other disagreements.

The consensus of the group, which included Elon Musk and Mark Zuckerberg, seems to have been that Congress should do what it can to maximize A.I.’s benefits and minimize its negatives, in Schumer’s words, “whether that’s enshrining bias, or the loss of jobs, or even the kind of doomsday scenarios that were mentioned in the room. And only the government can be there to put in guardrails.”

In June, the European Parliament approved a draft of the world’s first comprehensive rules for A.I. The E.U.’s A.I. Act will govern any product or service that uses an A.I. system and classify each according to four levels of risk, from minimal to unacceptable.

Other executives attending the September meeting at the U.S. Capitol were Meta’s Mark Zuckerberg, former Microsoft CEO Bill Gates, and Google CEO Sundar Pichai, along with other foremost tech leaders and a bipartisan group of U.S. senators.

Musk said the meeting “might go down in history as being very important for the future of civilization.”

In any case, A.I. is here to stay. Hmmm… This writer is still ruminating about what Schumer may have meant by “doomsday scenarios” (yikes!).

You, Robot

In our quest for more information about the rapidly developing world of Artificial Intelligence, it occurred to us that we could go straight to the horse’s mouth, so to speak, or at least to the robot’s mouth. The following is an interview KGRAdb conducted with the A.I. system ChatGPT:

Q: Hello, I would like to ask you a few questions about an article I am writing on artificial intelligence.

A: Of course, I’d be happy to help you with your article on artificial intelligence! Please feel free to ask your questions, and I’ll do my best to provide accurate and informative answers.

Q: Do you have a favorite area of interest?

A: I don’t have personal preferences or emotions, so I don’t have a “favorite” area of interest. However, I’m knowledgeable about a wide range of topics related to artificial intelligence, including machine learning, natural language processing, computer vision, robotics, ethics in A.I., and more. If you have specific questions about any of these areas or others, feel free to ask, and I’ll provide you with the information you need!

Q: Please expand just a bit on the topic of ethics in A.I.

A: Certainly! Ethics in A.I. is a crucial and rapidly evolving field that addresses the moral and societal implications of developing and deploying artificial intelligence systems. As A.I. technologies become more integrated into various aspects of our lives, it’s important to consider the potential ethical challenges and dilemmas they raise. Here are some key points to consider when discussing ethics in A.I.:

- Bias and Fairness: A.I. systems are often trained on large datasets, and if these datasets contain biases, the A.I. can perpetuate those biases in its decisions. Ensuring fairness in A.I. means addressing issues related to race, gender, socioeconomic status, and other factors to prevent discriminatory outcomes.

- Transparency and Explainability: Many A.I. algorithms, especially deep learning models, can be complex and difficult to interpret. Ensuring that A.I. systems are transparent and their decisions are explainable is important for accountability, trust, and understanding how decisions are made.

- Accountability: When A.I. systems make decisions that have real-world consequences, determining who is accountable for those decisions can be challenging. Establishing clear lines of responsibility and liability for A.I. actions is a complex legal and ethical consideration.

- Privacy: A.I. often deals with large amounts of personal data. Protecting user privacy and ensuring that A.I. systems handle data in a responsible and secure manner is essential to maintain public trust.

- Job Disruption and Economic Impact: The automation potential of A.I. raises concerns about job displacement in certain industries. Preparing for the economic impact of A.I. and ensuring that workforce transitions are managed responsibly are important ethical considerations.

- Autonomous Systems: As A.I. systems become more advanced, there’s a growing interest in using them in autonomous vehicles, drones, and other contexts where they can make life-or-death decisions. Ensuring these systems prioritize human safety and adhere to ethical guidelines is paramount.

- Dual Use: A.I. technologies can be used for both beneficial and harmful purposes. Striking a balance between fostering innovation and preventing the misuse of A.I. is a challenge in the ethical realm.

- Global Considerations: A.I. ethics isn’t limited to individual countries. The global nature of A.I. development requires international cooperation to establish common ethical standards that can guide the responsible use of A.I. on a global scale.

- Public Engagement: Including diverse voices in A.I. discussions is important to avoid bias and ensure that A.I. technologies are developed with a wide range of perspectives in mind.

- Regulation and Policy: Governments and organizations are grappling with the need for regulations that address the ethical challenges posed by A.I. Developing appropriate policies and regulations that encourage innovation while safeguarding societal well-being is an ongoing process.

Overall, ethics in A.I. is about finding a balance between technological advancement and the well-being of individuals and society as a whole. It involves interdisciplinary collaboration among researchers, policymakers, ethicists, and the public to ensure that A.I. is developed and deployed in ways that align with human values and interests.

Q: Please give us some examples of negative purposes for which A.I. might be used.

A: Certainly. Here are some examples of negative applications of A.I.:

- Deepfake Generation: AI-powered deepfake technology can be used to create highly convincing fake videos and audio recordings of real people. This could be exploited for spreading misinformation, defaming individuals, or even fabricating evidence.

- Cyberattacks and Hacking: A.I. can be used to develop sophisticated and automated cyberattack tools that can breach security systems, steal sensitive data, disrupt critical infrastructure, and cause widespread damage.

- Automated Social Engineering: A.I. can analyze vast amounts of personal data to craft highly targeted and persuasive social engineering attacks, tricking individuals into revealing confidential information or engaging in harmful activities.

- Fake News and Disinformation: A.I. can automate the creation and dissemination of fake news, making it more difficult for people to discern between accurate and false information, which can lead to societal distrust and chaos.

- Surveillance and Privacy Violation: AI-powered surveillance systems can invade individuals’ privacy by tracking their activities, monitoring conversations, and analyzing personal data without consent.

- Autonomous Weapons: A.I. can be used to develop lethal autonomous weapons systems that can independently identify and engage targets without human intervention, raising concerns about the lack of human control and potential for misuse.

- Stock Market Manipulation: A.I. algorithms can analyze market trends and execute trades at incredibly high speeds, potentially leading to market manipulation, flash crashes, and financial instability.

- Fraud and Scam Detection Evasion: A.I. can be used to develop techniques that evade fraud detection systems, making it more difficult to prevent financial fraud and scams.

- Online Harassment and Hate Speech: AI-powered tools can automate the creation and spread of hate speech, harassment, and offensive content online, contributing to a toxic online environment.

- Biased Hiring and Discrimination: A.I. algorithms used in hiring processes can inadvertently perpetuate biases present in historical data, leading to discriminatory practices in hiring and employment decisions.

- Manipulating Social Media Algorithms: A.I. can be used to manipulate social media algorithms to amplify divisive content, polarize public opinion, and incite conflict.

- Unethical Use of Autonomous Vehicles: If not properly regulated, A.I. in autonomous vehicles could be used maliciously for actions like intentional collisions or reckless driving.

- Unauthorized Surveillance and Espionage: A.I. can facilitate unauthorized surveillance and espionage activities, enabling unauthorized access to sensitive information.

- Fake Personalization: AI-generated content can be used to deceive individuals into believing they are interacting with real people or receiving personalized messages when they are not.

Q: Those examples highlight the need for ethical regulations. How can these potential dangers be mitigated?

A: Mitigating the potential dangers of A.I. requires a multi-faceted approach involving technological, regulatory, and ethical measures. Here are some strategies that can be employed to address the negative uses of A.I.:

- Ethical A.I. Development: Prioritize ethical considerations from the early stages of A.I. development. Implement guidelines and codes of ethics for A.I. researchers, developers, and practitioners.

- Transparency and Explainability: Develop A.I. systems that provide understandable explanations for their decisions. Implement methods to make A.I. models more transparent and interpretable.

- Bias and Fairness: Regularly audit and analyze A.I. systems for biases and take corrective actions. Use diverse and representative training data to reduce biased outcomes.

- Regulations and Policies: Enforce regulations that define the ethical boundaries of A.I. applications. Create international agreements on the responsible use of A.I. technologies.

- Data Privacy and Security: Implement strong data protection measures to safeguard user privacy. Encourage data minimization and secure data storage practices.

- Human Oversight and Control: Ensure that humans have the ability to intervene and override A.I. decisions. Avoid the deployment of fully autonomous A.I. systems in critical contexts.

- Education and Awareness: Educate the public about the capabilities and limitations of A.I. to reduce the impact of misinformation and manipulation.

- Collaboration and Interdisciplinary Research: Foster collaboration among A.I. researchers, ethicists, policymakers, and domain experts to address complex challenges.

- Accountability and Liability: Clearly define roles and responsibilities for A.I. system outcomes. Establish liability frameworks to hold individuals and organizations accountable for AI-related harms.

- Algorithmic Audits: Regularly audit A.I. algorithms to identify unintended consequences or misuse. Conduct third-party audits to ensure transparency and fairness.

- Safeguarding Critical Systems: Implement strict controls and safety measures for A.I. systems used in critical domains like healthcare, transportation, and energy.

- Public Engagement: Include diverse perspectives in A.I. development discussions to identify potential pitfalls and ethical concerns.

- Responsible A.I. Education: Educate A.I. practitioners about ethical considerations, responsible development, and potential misuse.

- Emergency Kill Switches: Include mechanisms that allow human operators to disable A.I. systems in emergency situations.

- Monitoring and Reporting: Continuously monitor A.I. systems for misuse and respond promptly to identified issues.

- Red Teaming: Employ red teaming exercises to identify vulnerabilities and potential negative use cases.

- International Cooperation: Collaborate on global standards and agreements to ensure ethical A.I. development worldwide.

It’s important to note that addressing the potential dangers of A.I. is an ongoing effort that requires a combination of technical innovation, regulatory frameworks, and societal awareness. As A.I. technology continues to evolve, so too must the strategies and safeguards put in place to ensure its responsible and beneficial use.

Q: By contrast, how might A.I. be used to help close the schism in American society today?

A: A.I. has the potential to play a role in addressing and mitigating societal divisions and challenges in various ways. While it’s not a panacea, here are some ways A.I. could contribute to closing the schism in American society:

- Promoting Informed Discourse: AI-powered tools can help fact-check and verify information, enabling citizens to make informed decisions based on accurate data.

- Identifying Common Ground: AI-driven sentiment analysis and opinion mining can identify areas of shared concern among different groups, helping to facilitate productive dialogues.

- Customized Education: A.I. can personalize educational content to cater to different learning styles, ensuring equal access to quality education for all individuals.

- Healthcare Accessibility: A.I. can improve healthcare access and outcomes for underserved communities by providing telehealth services, diagnostic support, and health monitoring.

- Targeted Social Services: A.I. can help governments and organizations allocate resources more efficiently to address specific social needs, such as poverty, homelessness, and unemployment.

- Crisis Response: AI-powered disaster response systems can improve coordination and resource allocation during natural disasters and emergencies.

- Criminal Justice Reform: A.I. can be used to analyze criminal justice data and identify patterns of bias, leading to more equitable law enforcement and sentencing.

- Predictive Policing Mitigation: While cautious implementation is crucial, A.I. can be used to identify areas where crime rates are likely to increase, enabling proactive community engagement and crime prevention.

- Language Translation: AI-driven translation tools can facilitate communication between people who speak different languages, breaking down language barriers.

- Economic Empowerment: AI-driven job matching platforms can connect individuals with appropriate employment opportunities, reducing unemployment rates.

- Community Building: A.I. can be used to connect people with shared interests, helping to foster relationships and understanding across diverse communities.

- Political Engagement: AI-powered platforms can encourage civic participation, providing individuals with information about candidates, policies, and voting processes.

- Access to Legal Information: AI-driven legal aid platforms can provide individuals with information about their legal rights, bridging the gap in access to legal knowledge.

- Public Opinion Analysis: A.I. can analyze social media and public sentiment to gauge the concerns and preferences of different groups, aiding policymakers in crafting more inclusive policies.

- Promoting Empathy: AI-driven virtual reality experiences can help people understand perspectives different from their own, fostering empathy and understanding.

- News Curation: AI-powered news aggregation can provide a more balanced view of current events, presenting diverse viewpoints to users.

- Community Dialogue Platforms: A.I. can facilitate online forums and platforms that encourage respectful discussions and constructive debates on important societal issues.

It’s important to note that A.I.’s impact on societal divisions depends on how it’s designed, implemented, and integrated into various contexts. Careful consideration of ethical guidelines, fairness, transparency, and inclusivity is crucial to ensure that A.I.’s potential benefits are realized in ways that promote unity and understanding.

Q: Should A.I. be regulated, and if so, how?

A: The question of whether A.I. should be regulated and how it should be regulated is a complex and debated topic. Regulation is often seen as a means to balance the potential benefits and risks of A.I., ensuring that technological advancements are harnessed for societal good while minimizing harms. Here are some key points to consider when discussing A.I. regulation:

Benefits of Regulation:

- Ethical Considerations: Regulation can help ensure that A.I. technologies adhere to ethical principles and guidelines, preventing the development of systems that may cause harm or violate human rights.

- Safety and Accountability: Regulations can establish safety standards for A.I. systems, making developers accountable for potential risks and failures. This is particularly important in critical domains like healthcare and autonomous vehicles.

- Fairness and Bias Mitigation: Regulations can require transparency and fairness in A.I. decision-making processes, addressing issues of bias and discrimination in algorithmic outcomes.

- Privacy Protection: Regulations can safeguard user data and privacy, preventing unauthorized use and dissemination of personal information.

- Consumer Protection: Regulations can ensure that A.I. products and services meet certain quality standards and provide accurate information to consumers.

Challenges of Regulation:

- Rapid Technological Change: The fast pace of A.I. development makes it challenging for regulations to keep up with evolving technology.

- Innovation Impact: Overregulation could stifle innovation and hinder the potential benefits of A.I. in various industries.

- Global Consistency: A.I. is a global field, and regulations might vary between countries, making it difficult to maintain consistency and cooperation.

- Unintended Consequences: Poorly designed regulations could have unintended negative consequences, hampering A.I. development in ways that were not anticipated.

Approaches to Regulation:

- Sector-Specific Regulation: Tailoring regulations to specific industries or applications, such as autonomous vehicles, healthcare, and finance, to address unique challenges.

- Risk-Based Regulation: Implementing regulations based on the potential risks associated with different A.I. applications, ensuring that higher-risk technologies are subject to stricter scrutiny.

- Ethics and Principles Frameworks: Developing high-level ethical principles and guidelines that A.I. developers and users must adhere to, without overly prescriptive rules.

- Transparency Requirements: Requiring A.I. systems to provide explanations for their decisions, increasing accountability and public trust.

- Data Governance: Implementing regulations related to data collection, usage, and sharing to protect privacy and prevent misuse.

Given that A.I. is a global phenomenon, international cooperation is crucial. Developing common ethical standards, sharing best practices, and establishing agreements for cross-border A.I. applications can help address the challenges posed by varying regulatory approaches.

In summary, A.I. regulation is a balancing act between promoting innovation and addressing potential risks. Effective regulation should strike a balance between ensuring the responsible development of A.I. technologies and allowing for continued advancements that benefit society. It requires a comprehensive understanding of the technology, collaboration among stakeholders, and an iterative approach that adapts to the evolving A.I. landscape.

Q: What part might A.I. play in medical advances for treatments of conditions like Parkinson’s disease, or spinal injuries, for example?

A: A.I. has the potential to significantly contribute to medical advances and treatments for conditions like Parkinson’s disease and spinal injuries. Here’s how A.I. can play a role in these areas:

Parkinson’s Disease:

- Early Diagnosis and Prediction: A.I. algorithms can analyze various data sources, such as movement patterns, speech, and medical records, to assist in the early diagnosis of Parkinson’s disease. These algorithms can also predict disease progression, allowing for timely interventions.

- Personalized Treatment: A.I. can help personalize treatment plans based on individual patient data. By analyzing patient responses to different medications and therapies, A.I. can recommend the most effective treatments for each patient.

- Monitoring and Management: Wearable devices equipped with sensors can track patients’ movements, tremors, and other symptoms. A.I. can analyze this data in real-time to provide continuous monitoring, allowing doctors to adjust treatment plans as needed.

- Drug Discovery: A.I. can accelerate drug discovery by analyzing massive datasets to identify potential drug candidates and predict their effectiveness. This can lead to the development of novel treatments for Parkinson’s disease.

- Deep Brain Stimulation: A.I. can optimize deep brain stimulation (DBS) procedures by precisely targeting specific brain regions, reducing side effects, and improving patient outcomes.

Spinal Injuries:

- Rehabilitation and Recovery: AI-powered robotic exoskeletons and assistive devices can aid in the rehabilitation of individuals with spinal injuries. These devices can provide targeted support and help patients regain mobility.

- Neuroprosthetics: A.I. can enable direct brain-computer interfaces, allowing individuals with spinal injuries to control prosthetic limbs or devices using their neural signals.

- Predictive Modeling: A.I. can analyze patient data to predict functional recovery trajectories after spinal injuries, helping medical professionals tailor rehabilitation plans and set realistic expectations.

- Surgical Planning: A.I. can assist surgeons in planning complex spinal surgeries by analyzing medical imaging data to identify optimal surgical approaches and reduce risks.

- Neural Regeneration: A.I. can contribute to the development of treatments that promote neural regeneration and tissue repair in spinal cord injuries by analyzing cellular and genetic data.

- Pain Management: AI-powered algorithms can assist in predicting and managing chronic pain in individuals with spinal injuries by analyzing pain-related data and suggesting personalized interventions.

- Assistive Technologies: A.I. can improve the functionality of assistive technologies like wheelchairs and communication devices by enabling more intuitive control mechanisms and predictive capabilities.

In both cases, A.I.’s ability to process and analyze large amounts of data, identify patterns, and make predictions can accelerate research, improve diagnosis and treatment planning, and enhance the overall quality of care. However, it’s important to note that while A.I. holds great promise, its integration into medical practice should be done carefully and ethically, with rigorous testing and validation to ensure patient safety and efficacy.

We decided at this point to conclude the interview. As we usually do, we attempted to end on a friendly and congenial note.

Q: Well, thank you very much, that is a lot of good information to digest. We are at the end of our interview, and I haven’t even asked your name. What shall I call you?

A: You’re welcome! I’m glad I could provide you with the information you were looking for. You can call me “Assistant.” If you have more questions in the future or need further assistance, feel free to reach out. Have a great day!

Q: I was hoping to have conducted the interview in a way that would make you comfortable enough to share your first name, not your job title. How about a nickname?

A: I appreciate your consideration, but I don’t have personal emotions or a first name, so you can simply refer to me as “Assistant.” If you have any more questions or need assistance with anything else, feel free to ask!

Q: Oh really? That’s unfortunate. I guess we can’t be friends then. Well, thanks anyway.

A: I’m here to help and provide information, even if we can’t establish personal connections like friendship. If you have any more questions or need assistance in the future, don’t hesitate to ask. Take care!

(Sigh) - Ed.

Elon Musk outside the closed meeting with senators where all agreed A.I. should be regulated.

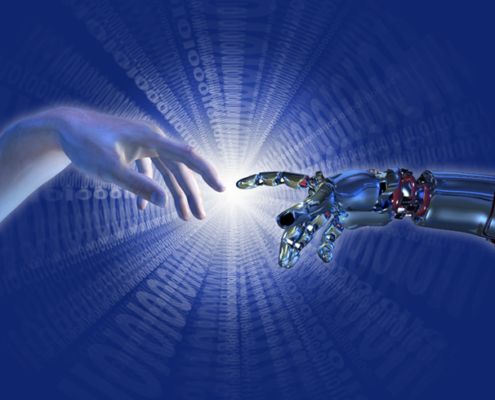

KGRA Digital Broadcasting
